StegoGAN: Leveraging Steganography for Non-Bijective Image-to-Image Translation

Published in CVPR, 2024

Recommended citation: Wu S. (2024). "StegoGAN: Leveraging Steganography for Non-Bijective Image-to-Image Translation." CVPR. https://arxiv.org/pdf/2403.20142.pdf

Sidi Wu, Yizi Chen, Samuel Mermet, Lorenz Hurni, Konrad Schindler, Nicolas Gonthier and Loic Landrieu

Abstract

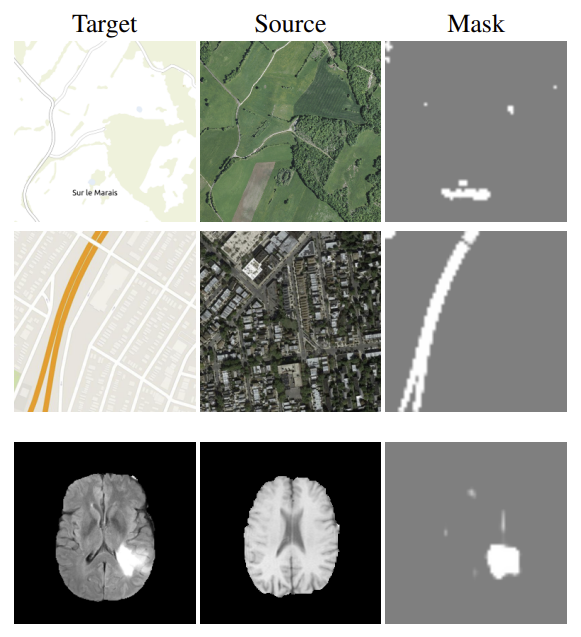

Most image-to-image translation models postulate that a unique correspondence exists between the semantic classes of the source and target domains. However, this assumption does not always hold in real-world scenarios due to divergent distributions, different class sets, and asymmetrical information representation. As conventional GANs attempt to generate images that match the distribution of the target domain, they may hallucinate spurious instances of classes absent from the source domain, thereby diminishing the usefulness and reliability of translated images. CycleGAN-based methods are also known to hide the mismatched information in the generated images to bypass cycle consistency objectives, a process known as steganography. In response to the challenge of non-bijective image translation, we introduce StegoGAN, a novel model that leverages steganography to prevent spurious features in generated images. Our approach enhances the semantic consistency of the translated images without requiring additional postprocessing or supervision. Our experimental evaluations demonstrate that StegoGAN outperforms existing GAN-based models across various non-bijective image-to-image translation tasks, both qualitatively and quantitatively.
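The steganography failure mode mentioned above can be illustrated with a toy NumPy sketch. This is not StegoGAN and not a trained CycleGAN. The mappings `G`, `F`, the constant `target_style` image and the amplitude `eps` are hypothetical stand-ins, chosen only to show how a generator can produce an image that looks like a plausible target image while still letting the inverse mapping reconstruct the source through a low-amplitude hidden residual. The result is a near-zero L1 cycle-consistency loss.

```python
import numpy as np


def cycle_consistency_loss(x, x_rec):
    """L1 cycle-consistency loss in the CycleGAN style: mean |F(G(x)) - x|."""
    return float(np.mean(np.abs(x - x_rec)))


# Hypothetical toy setup: the source image carries content with no
# counterpart in the target domain; a "plausible" target image is flat grey.
rng = np.random.default_rng(0)
x = rng.random((8, 8))                # source image, values in [0, 1)
target_style = np.full((8, 8), 0.5)   # hypothetical target-looking image
eps = 1e-3                            # hypothetical amplitude of the hidden signal


def G(x):
    """Toy generator X -> Y: looks like the target, but hides x in a tiny residual."""
    return target_style + eps * x


def F(y):
    """Toy inverse Y -> X: decodes the hidden residual to recover the source."""
    return (y - target_style) / eps


y = G(x)
# Cycle loss is close to zero although y is visually indistinguishable from target_style.
print(cycle_consistency_loss(x, F(y)))
# Largest per-pixel deviation of y from the target-looking image stays below eps.
print(float(np.max(np.abs(y - target_style))))
```

In this toy, the cycle objective is satisfied only because information about the source survives in an imperceptible perturbation. This is the behaviour the abstract describes for CycleGAN-based methods on non-bijective tasks.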

Keywords

- Non-Bijective Image Translation

- Steganography

- GAN

Recommended citation: Wu S., Chen Y., Mermet S., Hurni L., Schindler K., Gonthier N. and Landrieu L. “StegoGAN: Leveraging Steganography for Non-Bijective Image-to-Image Translation” CVPR 2024.