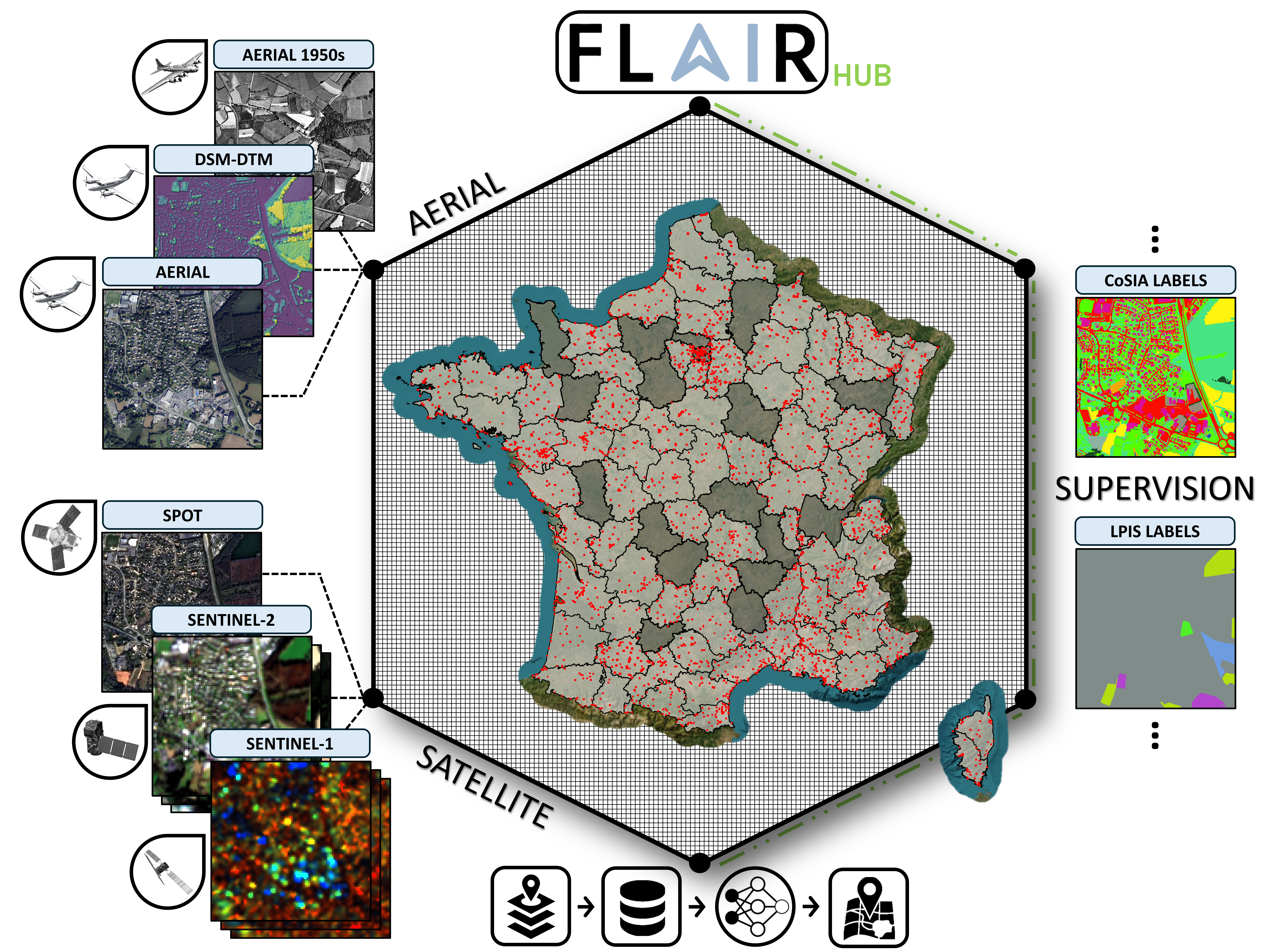

FLAIR-HUB: Large-scale Multimodal Dataset for Land Cover and Crop Mapping

Published as a preprint, 2025

Recommended citation: Garioud A., Giordano S., David N. and Gonthier N. (2025). "FLAIR-HUB: Large-scale Multimodal Dataset for Land Cover and Crop Mapping" preprint. https://arxiv.org/pdf/2506.07080.pdf

Anatol Garioud, Sébastien Giordano, Nicolas David and Nicolas Gonthier

PDF - Dataset - Pretrained Models

Abstract

The growing availability of high-quality Earth Observation (EO) data enables accurate global land cover and crop type monitoring. However, the volume and heterogeneity of these datasets pose major processing and annotation challenges. To address this, the French National Institute of Geographical and Forest Information (IGN) is actively exploring innovative strategies to exploit diverse EO data, which require large annotated datasets. IGN introduces FLAIR-HUB, the largest multi-sensor land cover dataset with very-high-resolution (20 cm) annotations, covering 2528 km² of France. It combines six aligned modalities: aerial imagery, Sentinel-1/2 time series, SPOT imagery, topographic data, and historical aerial images. Extensive benchmarks evaluate multimodal fusion and deep learning models (CNNs, transformers) for land cover or crop mapping and also explore multi-task learning. Results underscore the complexity of multimodal fusion and fine-grained classification, with best land cover performance (78.2% accuracy, 65.8% mIoU) achieved using nearly all modalities. FLAIR-HUB supports supervised and multimodal pretraining, with data and code available.
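For readers unfamiliar with the two metrics quoted in the abstract, the sketch below shows how overall accuracy and mean Intersection over Union (mIoU) are commonly computed from per-pixel labels. It assumes the standard textbook definitions; the benchmark's exact evaluation protocol (for example, which classes are ignored) is not stated on this page, and the function names and toy labels are purely illustrative.

```python
from collections import Counter


def confusion_counts(y_true, y_pred):
    """Count (true_class, predicted_class) pairs over flattened label sequences."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    if len(y_true) == 0:
        raise ValueError("at least one labelled pixel is required")
    return Counter(zip(y_true, y_pred))


def overall_accuracy(y_true, y_pred):
    """Fraction of pixels whose predicted class equals the reference class."""
    counts = confusion_counts(y_true, y_pred)
    total = sum(counts.values())
    correct = sum(n for (t, p), n in counts.items() if t == p)
    return correct / total


def mean_iou(y_true, y_pred):
    """Average of per-class IoU = TP / (TP + FP + FN) over all classes present."""
    counts = confusion_counts(y_true, y_pred)
    # Every class seen in the reference or the prediction contributes one IoU term.
    classes = sorted({c for pair in counts for c in pair})
    ious = []
    for c in classes:
        tp = counts[(c, c)]
        fp = sum(n for (t, p), n in counts.items() if p == c and t != c)
        fn = sum(n for (t, p), n in counts.items() if t == c and p != c)
        ious.append(tp / (tp + fp + fn))
    return sum(ious) / len(ious)


# Toy example: six pixels, three hypothetical classes (0, 1, 2).
reference = [0, 0, 1, 1, 2, 2]
predicted = [0, 1, 1, 1, 2, 0]
print(overall_accuracy(reference, predicted))  # 4 of 6 pixels are correct
print(mean_iou(reference, predicted))          # per-class IoUs are 1/3, 2/3 and 1/2
```

Because mIoU averages over classes rather than pixels, rare classes weigh as much as frequent ones, which is one reason it is typically lower than overall accuracy on imbalanced land cover data.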

Keywords

- Semantic Segmentation

- Multimodal Learning

- Land Cover

- Crop Mapping

- Aerial and Satellite Images
